Please tell me a bit about yourself – what’s your background and what do you do when you’re not making AI art?

My name is Sam, and I’m a UK national who recently graduated from a college in Chicago. Outside of taking commissions for art and managing both the @images_ai account and a Bandcamp music label called Abstract Image Records, I produce a lot of music, run D&D sessions, and watch horror movies. During my degree, I focused mostly on Existentialism in Lovecraftian Fiction, Epistemic Injustice, and the Philosophies of Art and Fascism.

I think your Twitter account was my first encounter with AI art just over a year ago – I followed your guide to VQGAN+CLIP and was immediately hooked. How did you first get started and what led you to set up your Twitter account?

images_ai: Also through Twitter. If you know your Vaporwave, you will have heard of a label called DMT Tapes FL. It’s the biggest music label in the genre. I followed their account and noticed that the guy behind the label - Vito - was posting these weird, hallucinogenic low-res renders, which after a bit of prying I tracked down to The Big Sleep, the BigGAN/CLIP Colab that Ryan Murdock put together. They had a really melancholic, lo-fi texture that I still adore, like old photographs on expired film. I played around with it for a bit and then sort of gave up, assuming it was an interesting novelty.

Then, about a month later, I saw Vito posting some images that were radically different, and decided to check out VQGAN+CLIP by Katherine Crowson, which was partially inspired by The Big Sleep. After revisiting Big Sleep and getting to know VQGAN, I realized that 1. this new style of image creation had some really serious artistic potential (I still don’t think there’s a human on Earth capable of emulating the style of VQGAN successfully), and 2. neither of the developers, Ryan or Katherine, had more than a few thousand followers on Twitter, which seemed crazy considering how revolutionary their artwork was. So, I set up a new Twitter account on June 18th, posted some of my initial Big Sleep experiments, and then started regularly uploading VQGAN content of my own and retweeting the work of other artists and developers, with the specific intention of promoting and platforming art created by AI machines to raise their profile.

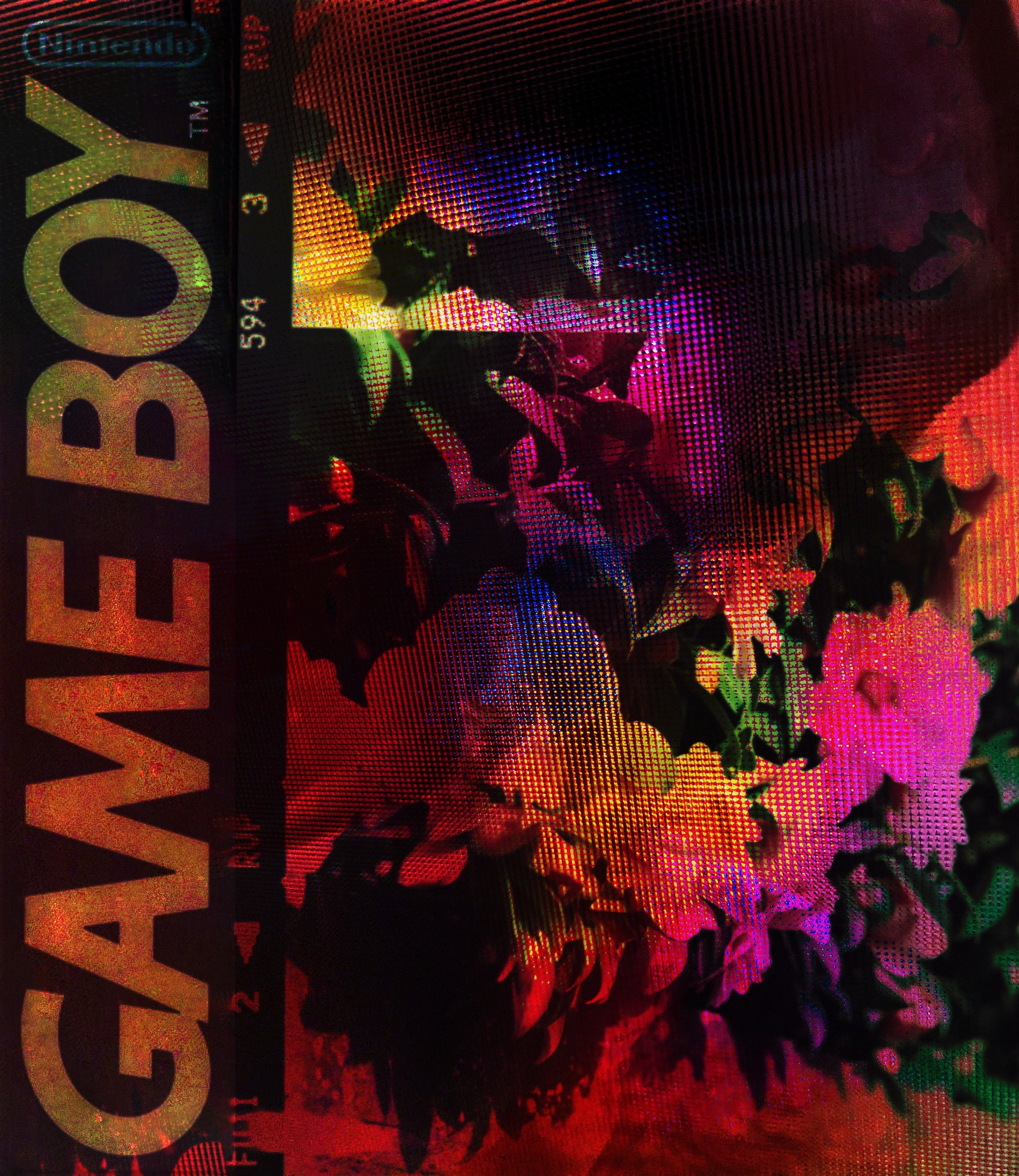

↑ The first Big Sleep images posted on @images_ai, with the text caption 'mono no aware'.

I sent Vito a DM stating my intentions, and he was kind enough to promote a lot of my work, which was enough to net me an initial following that snowballed dramatically in the subsequent days. We hit 40,000 followers within two months - even today, more than 90% of the platform comes from people who followed in July 2021. I’ve been trying to continue that project ever since. It probably succeeded.

How have things changed since you first started, and has it changed anything for you specifically?

images_ai: The primary difference between then and now is that I hopped on the hype train immediately before this stuff got huge, so it transformed from a reasonably low-key community of artists and researchers on Twitter to a massive - but still quite insular - community of traditional artists, new artists, researchers, journalists, hobbyists, and haters very quickly.

Within that community, only a handful (such as yourself) have actually been able to form a distinctive and particular art style using these tools, which isn’t surprising - the same happens with every art scene and every new art technology. Obviously the tech itself is evolving at a rapid pace, and it seems the dominant players in that field have transitioned from indie programmers into collectives and companies like Midjourney and Stability. Alongside that, I think a lot of the appeal has also changed. The 'wow! An AI made this?!' factor never went away, although the adjustment from GANs to increasingly massive diffusion models has meant that different generators are becoming less and less distinctive by themselves, so what an artist chooses to do with their generations, and how they want to present them, has come more to the fore.

On a personal level, it hasn’t changed a whole lot. I take commissions whenever they come in, although they’re few and far between. In real life, the account is mostly just a personal novelty to my friends and family. It’s important to remember that in the real world, very few people actually know about this stuff, and the vast majority of those who have seen it just think it’s a kind of cool, kind of novel thing. Almost nobody has strong feelings about it, positively or negatively.

How would you describe what you do and what are you working on right now?

images_ai: When it comes to the artwork I post, it’s always been a combination of raw experiments from new software (Big Sleep, VQGAN+CLIP, CLIP-Guided Diffusion, Disco Diffusion, Midjourney, DALL-E, Stable Diffusion, Kali Yuga’s Diffusion Models, @ai_curio’s Looking Glass etc.) and more refined pieces that I put together in Photoshop.

I think I’m a little bit on the outside of the community in that sense, because most of my 'professional' pieces involve using AI generation for assets to collage into other stuff, combined with real life photographs, overlays, and text. In that sense, I think about it essentially as an all-digital form of Mixed Media, but instead of combining painting with sculpture and collage, I’m combining material from various AI generators, upscalers, and inpainters with digital painting, collage, written text, and music.

↑ Signs of Life.

I love extremes, and layering all kinds of shit together to find the unique shapes that form in the spaces between them; it’s a lot of minimalism and a lot of maximalism. Considering my artistic background is in music and especially DAW composition, this shouldn’t be surprising. I basically took my approach to producing Dance Music - make loops, layer them, add some, subtract some, morph them over time - and applied it to visual art.

↑ Lord of Noise.

Most of my influence comes from horror fiction and music that plays with hauntology - stuff like Vaporwave, billy woods, Burial, Lingua Ignota, Moor Mother. How do we emulate the past in our lives and in our art, consciously and subconsciously? What effect will this have on our future? In particular, I’ve been interested in examining which features of the cultural lexicon go explored and unexplored within these art communities themselves. What are the ethical and cultural implications of making artwork with tech that might put someone else out of a job? How do we embrace or reject the fungibility and capacity for emulation inherent to both AI tools and the Internet in general? How do we as explorers of a latent space that represents, to some degree, a cross-section of the modern Internet reckon with the biases and prejudices embedded within the Internet itself?

↑ Destined Death.

I think AI Art and Internet Art in general have a really serious Orientalism problem that hasn’t been dealt with. While the ideas of ‘liminality’ and ‘liminal spaces’ have been stripped of most meaning by Reddit aesthetic pages, the relationship between nostalgia, fear, prejudice and death has always been fascinating to me. Employing these liminal, latent spaces that carry so many artistic, political, and cultural ghosts with them seems like a unique and exciting way to explore that theme. I also like fucking around with the human body.

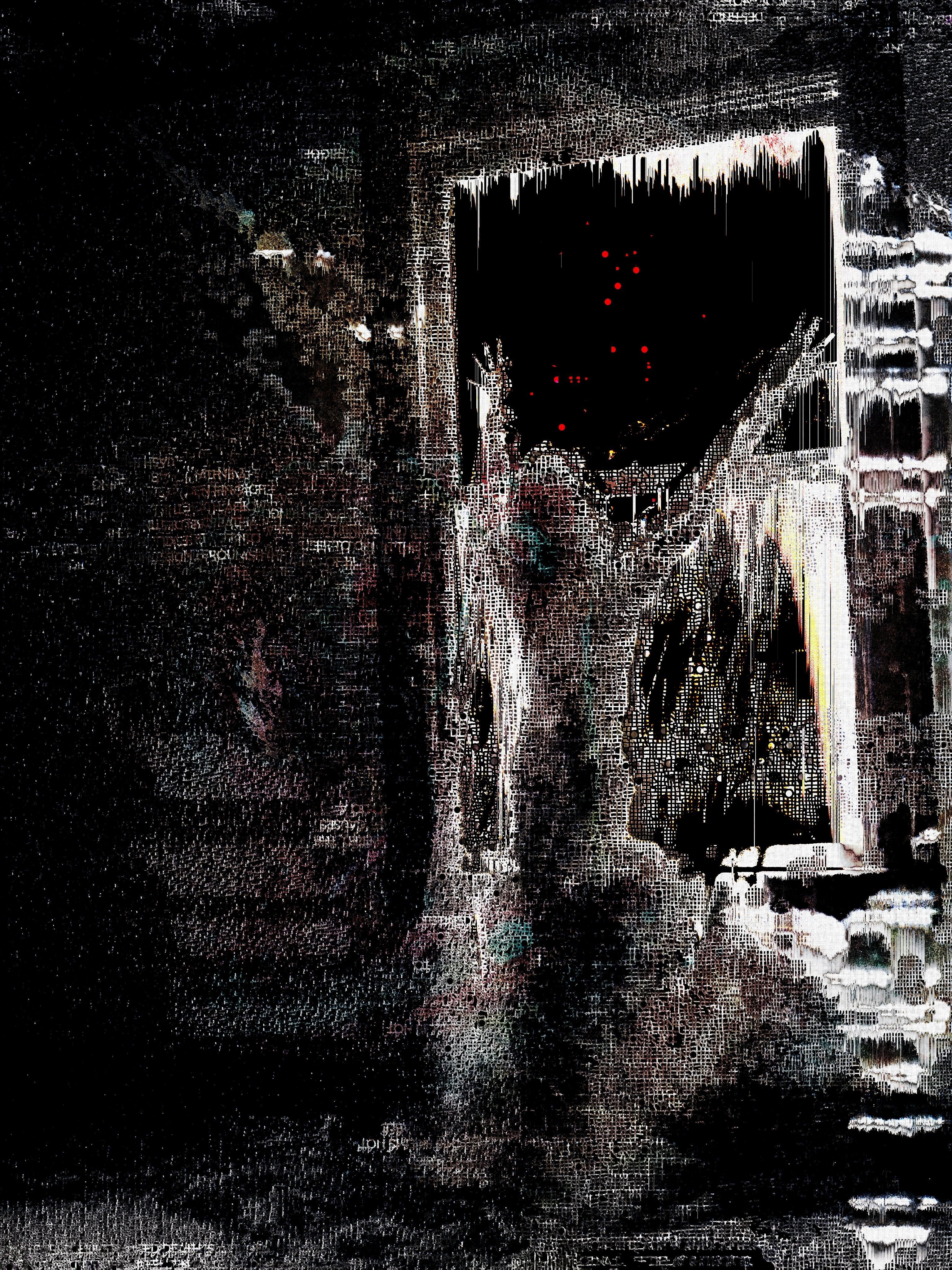

↑ help.exe.

Which piece are you happiest with – and which one surprised you the most?

images_ai: If there’s any work of my own that I think best communicates its own themes with the nuance and emotion intended, it’s one called “untitled (angel)” from back in January. I used the artifacts introduced by Disco Diffusion to tattoo and scar a real photograph of a shirtless man that was then cropped at the waist, and then I used the same process to create a halo and a pair of wings. The background is a Looking Glass generation produced after feeding the machine a series of newspaper covers about the AIDS crisis, which was then overlaid in the final product by a Ronald Reagan speech and some splashes of paint that stick out around the angel and void his eyes. This is also the piece I’d most want hanging on my wall.

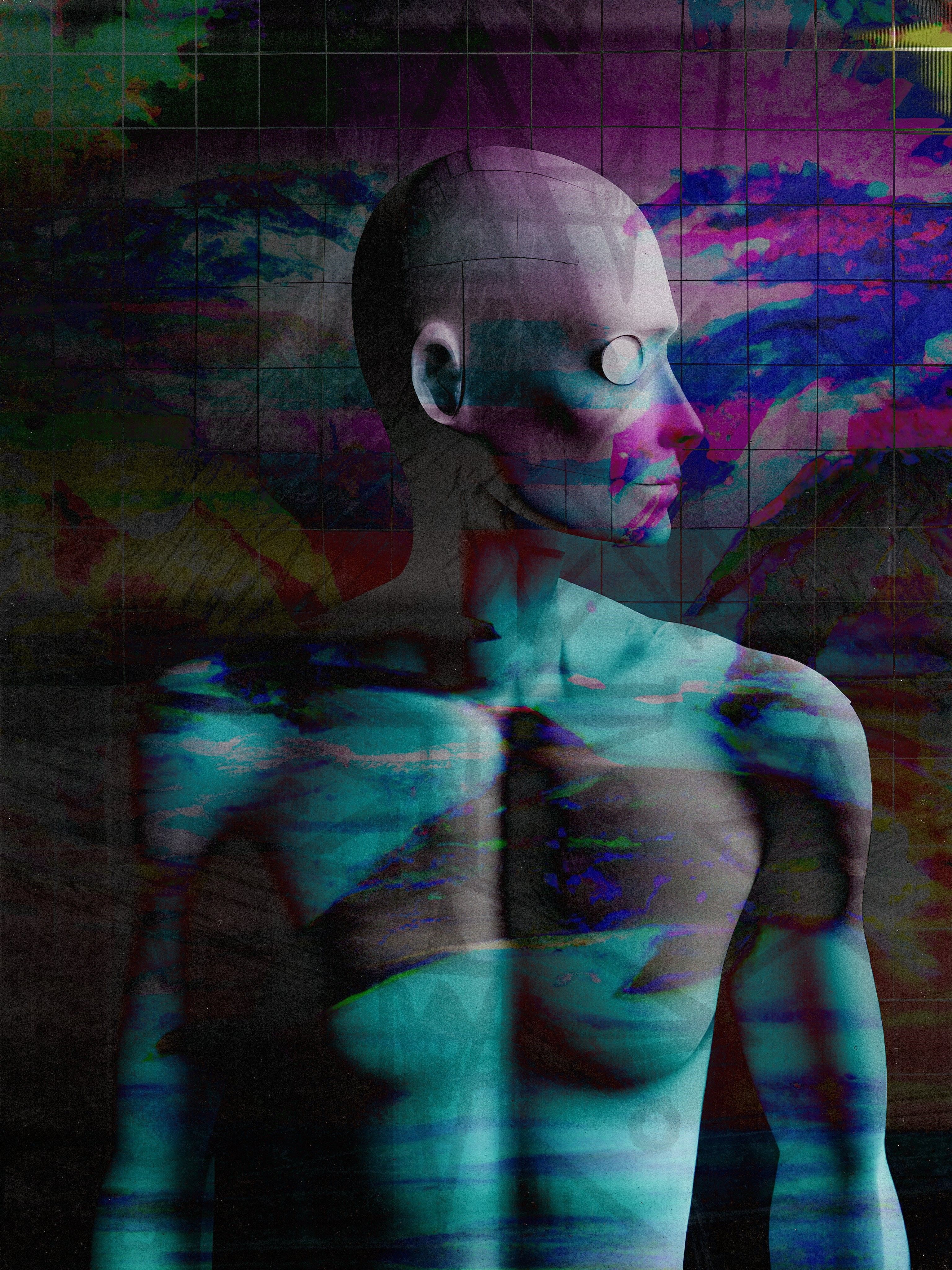

↑ untitled (angel).

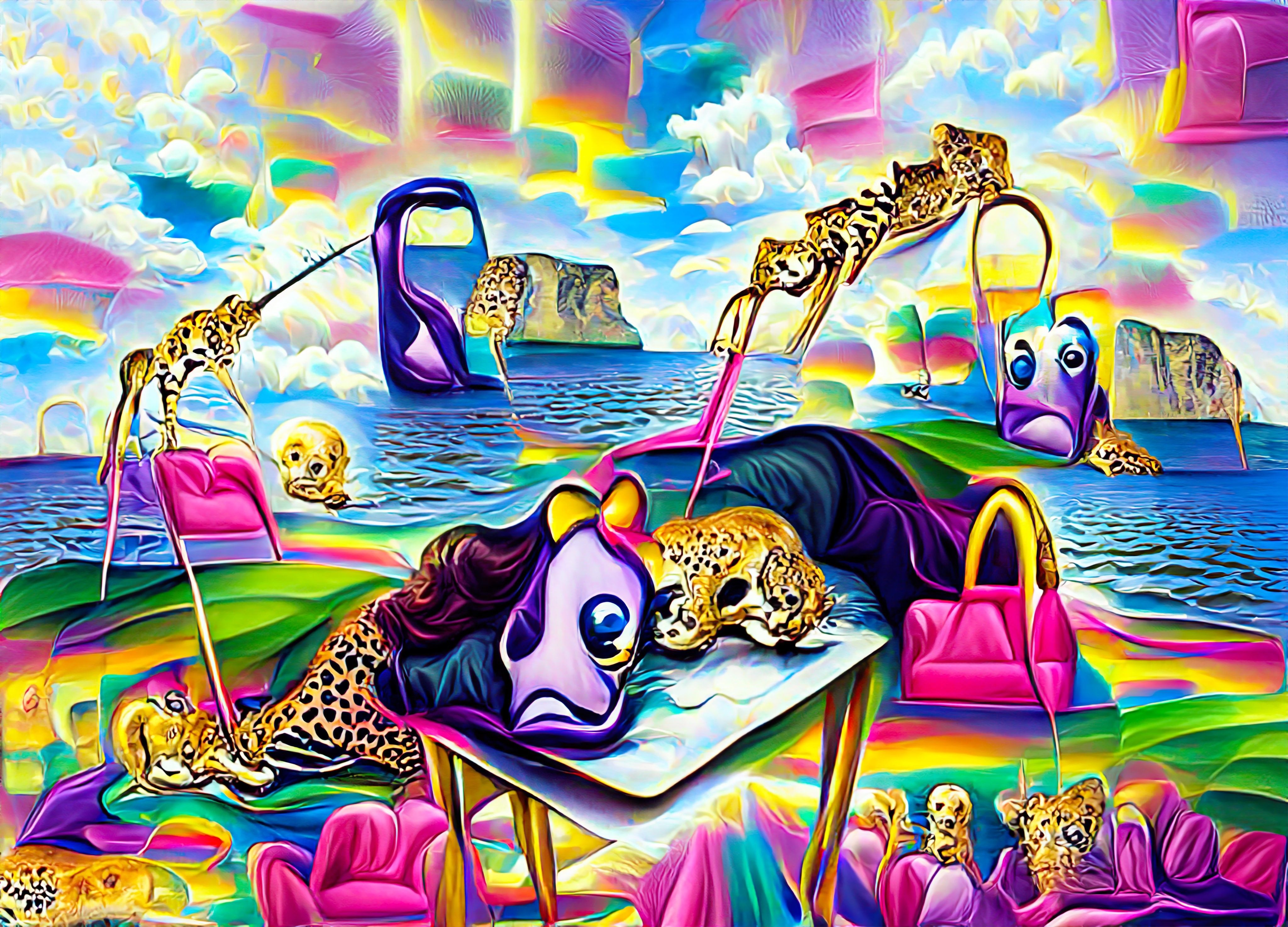

The most surprising piece is obviously “the persistence of memory by lisa frank,” which is the singular post that launched the account so quickly and so suddenly. I remember I left VQGAN+CLIP running with that prompt overnight; I think in the end it ran for something like 8000 generations before stopping. Honestly, I’m still shocked that a VQGAN composition was able to achieve that much structure without even an initial image. You can sort of see the lineage between the original Dali painting and this piece, even though I didn’t incorporate the Dali into its generation. That got posted only 8 days into the account.

↑ the persistence of memory by lisa frank.

What are your favourite notebooks and what other tools do you use - AI models, software, hardware etc?

images_ai: I listed most of the AI tools I’ve employed in the past above, but at the moment I mostly make art by generating assets in either DALL-E or another, more painterly diffusion model (it was Disco, then Midjourney, and now very recently Stability) and then combining them with other materials, overlays, photographs etc. in Photoshop. I use AI image upscaling all the time, usually imglarger.com if I want something neutral and clean, or bigjpg.com if I think the image also needs some denoising. For video material I mostly use Premiere Pro.

DALL-E is generally very versatile, and great if you want emulations of photography. Especially if you ask for something out of focus, grainy, expired, blurry or abstract, you can achieve results that might be genuinely indistinguishable from real photographs, and with all the specificity, particulars and random variation inherent to AI generation. It’s pretty good at making textures too.

↑ DALL-E images, from the prompt “Hazy Grainy Blurry 35mm Film Photograph by Saul Leiter, 50mm lens, of a beautiful woman with a lovely face in a flowing white sundress with a cherry blossom in her loose tied back hair looking sideways off into the distance on a cold foggy day on the moors. Abstract. Experimental.”

Stable Diffusion is generally better when it comes to emulating a lot of painted art styles, or content that you can’t achieve with DALL-E’s very strict censoring. Even slightly older models like Midjourney or Disco are really useful if you want something more abstract and obviously AI-generated. It’s crazy how rapidly we’re exiting the Uncanny Valley. @KaliYuga_ai’s recent fine-tuned diffusion models for Pixel Art, Watercolors and Textiles have produced some stellar results. I like using the Watercolor model for post-processing DALL-E material.

Looking Glass is fascinating because it reverses the process somewhat - you feed the machine images, and it produces more images, by transferring them all through the text-related latent space. And of course, my primary workhorse for over a year was the S2ML Colab notebook by @somewheresy which had an unclosed permanent tab on my PC 24/7 for many, many months. It has functionality for both VQGAN and Katherine Crowson’s initial CLIP-Guided Diffusion, and ran in a much more user-friendly UI than most of the Colabs put together at the time. It’s the notebook I wrote the VQGAN tutorial for.

There’s generally no hardware involved in my art-making process at all.

What’s your process? Please can you talk me through the creation of a piece from initial idea to finished artwork.

↑ What Was That You Said?

images_ai: My process typically works one of two ways. Sometimes, I’ll have the concept for a fully-realized piece already in my head. At that point, I’ll usually try to form the materials as quickly as I can with a more advanced generator until I have a solid base, and then piece the art together bit-by-bit until I have what I want. Other times, I’ll arrive at the art through jamming and experimentation, starting either with a photograph from Unsplash or a DALL-E result, which I’ll overlay and mess around with for hours until the piece reaches a natural conclusion. Flattening the work in Photoshop, fucking around with the Content-Aware Fill, and then layering even more stuff on top, flattening it again etc. etc.

↑ What You Know Is Better.

I use a lot of noise. Film grain overlays, concrete overlays, old photograph and vinyl textures, gaussian generated noise - usually with the intention of emulating old media, like book covers, album covers, oil paintings. I don’t often enjoy artwork that comes off as too clean, especially when the artwork is digital. The introduction of more layers, more artifice, I think allows for a greater capacity for meaning. Plus, the addition of texture can grant your piece a degree of realism that might sell it better as a singular, cohesive work of art, instead of a collage of digital assets - sort of like a glue or a gel, or a bus compressor in music. It’s essentially polish. Dirty, grimy, noisy polish.

↑ Bodies Tend To Break.

There’s been controversy recently about AI art and copyright, especially with the release of powerful models like Stable Diffusion. What’s your take on this issue?

images_ai: Complicated and nuanced, unfortunately. The issue has many sides derived from many different parties and positions, which can also make it difficult to discuss. I’m going to write about this elsewhere in much longer form, but to put it simply my take is this:

Copyright, as a means of ensuring that an artist has the exclusive right to reproduce and recreate their own work, is a framework and a structure that exists in tandem with capitalism, and it only finds real moral value in redistributing wealth and material goods to artists who need it from people and companies who don’t - and it usually doesn’t do that. As with any other law, copyright is not fundamentally moral, good, or useful to everyone, and almost always gets used in reality to punish people with good and interesting ideas that pertain to existing media for the benefit of corporations.

As I stated above, there’s an intrinsic hauntological element to AI image generators, because they exist as digitized conceptions of huge swathes of existing historical imagery, much of it copyright protected. And so, as with any law, moral considerations should be made on a case-by-case basis about whether an individual’s use of AI generation is useful, interesting, creative, transformative, and original, in addition to whether their work affects the material conditions of any person, artist or not, and whether those actions are justifiable. I’m 100% comfortable stealing from corporations, major record labels, and world-famous multi-millionaire painters to make transformative, purposeful, engaging art on Twitter or Bandcamp.

I’m 0% comfortable stealing from small-time artists whose work I’m not transforming at all and that I’m selling for a high price for my own gain, and who might be out of a job because of my actions. Every single other case exists somewhere on a spectrum between those extremes, and there are many utilitarian considerations to make when determining whether the use of an AI machine to emulate a particular artist or work is moral - but these considerations exist separately from copyright, which should only ever be a means to realize actual justice and compensation, and not deferred to as a blanket moral arbiter for how art should and shouldn’t be made. A huge amount of incredible, influential, world-changing artwork has come from the infringement of copyright law.

Whether these AI generators actually do reproduce existing artwork is a topic of much discussion - after having studied the way this process operates for at least a year, and having had these observations verified by some of the developers who built them, I’m confident in making the assertion that at the very least, the presence of any given copyrighted work from the training set within a final output is granular enough that its identification is not possible, so long as the model is actually functional and not overfitting.

Should artists be worried about these tools?

images_ai: A lot of concept artists are worried right now about their jobs being automated by AI generators. The fact that those generators might be trained on their own work, which is a process they didn’t consent to, rubs a lot of salt into that wound. Redundancy means retraining in another field, or unemployment - and unemployment for many artists means suffering and/or death. Being an unemployed and occasionally homeless artist myself, I have unending sympathy and empathy for artists who might end up in this position, and it’s frustrating that so many people assume the artists and devs in this community don’t care about this issue, not least because a lot of people making art with AI saw this coming years ago and warned about it. The tech leading up to DALL-E was in the pipeline for the better part of a decade.

While I don’t consider myself a pessimist, I think it’s worth being realistic about the ways in which we as artists and workers can resist and fight back against capitalist forces. The truth is, as soon as it became clear that the computing power and storage space existed to train a program that made associations between written text and visual images, a future where corporations tried to advance this technology to a point where they could put people out of jobs in favor of automation became certain, and these are the same corporations who have the US legal system and copyright law at their every beck and call. Being able to sue artists who make original content with existing IPs is good for Google. Being able to take the copyrighted work of artists, compile them into a dataset, and then train a model that Google can use to put concept artists out of a job is good for Google. And thus, the law was rendered so.

I think trying to fight back at them through this process, by claiming copyright infringement, is likely going to be extremely difficult and maybe not beneficial for artists in the long run, although any policy that makes it harder for Google to exploit workers is a policy I’m going to support. The question would then become whether any artist using a copyright-infringing model is therefore also consequently bad - and I think the answer to that question is going to be usually no, for the reasons I stated before.

This is why I emphasize the importance of context in the moralization of these models. It is absolutely the case that big tech should be regulated as much as possible, and compelled to only use models trained on public or licensed material if they’re going to train a model at all. Pretty much everyone in the AI Art space supports the idea of a public domain dataset. But the question should always be, who is using this software, why, and what does it affect? Is it morally wrong for an independent artist to use copyrighted material to create original, transformative art that doesn’t affect the career or conditions of the artists whose work trained the model? No. Is it morally wrong for Google to train a model on copyrighted work to create derivative, uninspired work without paying for the labor of human artists who honed their craft for years? Yes! And these two sets of actors don’t typically overlap.

This is why it seems so strange to me that the target of these concept artists’ ire has been Stable Diffusion and the artists who use it, who aren’t putting anybody out of a job with their work. Certainly right now, big tech companies are not using Stable Diffusion, and even if this model didn’t exist in the public domain, Google and OpenAI would train their own for commercial use anyway - they both literally have done that, and they have copyright law on their side too. We should be emphasizing policies like unionization and strike action, establishing mutual aid funds, pushing back against the exploitation of labor by corporations on all fronts. And the fact that we do have something like Stable Diffusion in the public domain means that, in the worst-case scenario where artists are forced to either retrain or die, we’ve already been developing freeware that might allow them to retain some aspect of artistic freedom and expression in their work, within the capitalist nightmare we all live in. I honestly don’t believe we’re at odds on this issue at all.

What opportunities do you think these tools will bring?

images_ai: Very many. Accessibility is an underappreciated value in the art world, but anything that makes it easier to take an expression you hold in your mind and translate it into something real is going to be a net good for artists, especially artists who for various reasons might not have the ability to create art by more traditional means. Speaking as someone who has worked in digital art, the ability to generate the textures and materials I need in a few seconds instead of sourcing something from elsewhere and manipulating it for hours is a huge benefit. I can put together a professional-grade piece that I’m happy with and looks exactly how I pictured it in less than an hour. That’s crazy. I think we’re going to see some really fascinating technologies pop up once Stable Diffusion goes public and gets integrated into other platforms. I’d love to see some compatibility with the Neural Filters in Photoshop, for example.

Where do you see the future of these tools going, especially in relation to the art world?

images_ai: It doesn’t seem like advances in computing power and storage space are going to slow down any time soon, which means the capacity for AI generation will only increase. If we were to extrapolate forward based on the amount of ground covered in the past year alone, there’s very little I’m willing to say is off the table when we discuss what might come to pass one, two, five years from now.

I think we’re very quickly approaching a grade of diffusion engine where the results are actually, genuinely indistinguishable from original human work. As soon as CLIP-equivalent models are trained on other forms of media, too, we’ll start to see AI generated content popping up there. I’m interested to see how long it will take before we have a functional model for AI-generated video clips based on captioning - presumably the captions would need to be much more detailed, or maybe time-sensitive; have CLIP caption a video frame by frame, and have parameters fade in and out over time, or something. An AudioCLIP model for captioned sound samples also feels within the realm of possibility. Take a database of tagged sounds like Splice, for example, and you could put together a really interesting diffusion engine for creating original sounds.

The possibilities are sort of endless. The GPT-3 Codex model is absolutely blowing my mind right now. We’re going to have AI generated video games very soon. I don’t think we’re far off from having text-to-code models capable of creating and training their own iterative, improved text-to-code models. And then we’ll all be truly fucked. It’s extremely exciting and quite scary.

What’s next for you and what are you hoping for?

images_ai: I’m trying to find work at the moment, so I’ve been pushing for more commissions. It would be great to see some of this artwork appearing on bigger music releases for example. It looks like Lil Uzi Vert’s recent single had an AI-generated cover, which is cool. We also have some super exciting releases on the way at Abstract Image Records, which is dedicated to promoting and distributing work by underground experimental, ambient, and dance music artists. The record store is a democratized art gallery, after all. Cover art is a great place for innovative artwork to shine.

I’d like to write some more, and try to work towards a more substantive distinction between the different schools of art that have formed in the wake of this technology. It really seems like 'AI Art' is something of a misnomer, or at least it’s currently functioning as an increasingly stretched umbrella term for a whole variety of different practices, methodologies and styles, which all happen to be employing the same pieces of tech.

It would also be cool to do some more video work. I ran some VQGAN animation tests a while back, in addition to a collaboration with The Exploring Series on an SCP poetry reading, but the technology has advanced leaps and bounds since then.

Who else should I speak to and why?

images_ai: Obviously @ai_curio. They're also charming to speak to. Can't recommend them highly enough. Ryan Murdock and Katherine Crowson are the OGs in this game, and they’re both extremely intelligent and interesting thinkers and artists. Kali Yuga is doing some incredible stuff in the world of model training, and her own art is sublime. Ted Underwood and @somewheresy are both very erudite. Vito from DMT Tapes FL is who got me into this game, and he’s now dedicated his Twitter full-time to creating AI art at @DMTAI_FL. He’s always worth listening to. The work of Glenn Marshall was some of the first video-based AI art I came across, and it still looks great over a year later. I don’t think he talks a whole lot about process on Twitter, so an interview would be super cool.

My favorite art coming out right now is from a kid with the handle @FlaminArc. It’s an incredible fusion of Y2K, emo, nightcore, hyperpop digital art that I immediately fell in love with. It’s so distinctive and unique, I’d love to hear where they get their influences from.

@proximasan, @KyrickYoung, @EErratica, @sureailabs and @yontelbrot are all doing incredible work in both art generation and research. It might be worth interviewing them as a group about the Artist Studies project they’ve been working on for months now. It’s an invaluable resource for Stability and Disco users.

The account @multimodalart runs a newsletter for AI Art which is always super informative. I’m sure their operator is worth talking to.

One of my favorite musicians is a producer named Galen Tipton, and I’ve created several pieces of cover art for her. She just released her first pack on Splice, and last I heard she was starting to employ tools like DALL-E and Jukebox AI to create original artworks too. Might be worth hitting up for a semi-outsider perspective on this scene and its artistic applications. Nat Puff AKA Left at London also seems to have taken an interest.

The musician and tech wizard Holly Herndon has been creating super innovative and absolutely stunning art with AI in various forms for years and years. I’m also absolutely fascinated by her creative process. You should absolutely reach out to her and see if she wants to chat.

The operator of the Mutant Mixtape series of charity Bandcamp albums has also been a longtime supporter, and has some really interesting perspectives on the artistic merit of AI artwork. Definitely worth a chat.

There was a pretty well-received album from the band Everything Everything called Raw Data Feel which used a whole lot of cover art featuring VQGAN generations. From what I can tell, nobody has asked them about this, but it’s probably the highest profile use of this art style I’ve seen outside of that Lil Uzi single. Maybe you could interview them about that project and how they chose to incorporate VQGAN into their cover art. I’d imagine it ties in conceptually somehow.

Some of the highest profile AI art applications have come from the San Francisco influencer Karen X. Cheng. If you could snag an interview with her that would be awesome, not least because it seems like she has access to some seriously cutting edge tech.

@Lost_letters_ and @deKxi also make some great stuff; I would love to hear their perspectives.

It would also be very interesting and worthwhile to hear some genuine outsider perspectives on this technology from other artists. Simon Stålenhag has expressed both interest and reservations about the use of this technology. Kris Straub, the artist responsible for the incredible Local 58 web series, has been a supporter too. I’m sure he’d have something really insightful to say! The concept artist Karla Ortiz has expressed some serious concerns about consent in datasets as a non-AI artist. She seems very willing to engage in a useful dialogue about this. I was considering reaching out to interview her myself, but it might fit better as part of your series instead.

Please do follow images_ai on Twitter, visit their website and check out their Bandcamp page.

Unlimited Dream Co.
