A creative director and live-action director, Makeitrad has been working in commercial design for around 20 years. His work is an innovative mix of 3D animation, StyleGAN interpolation and detailed Diffusion images that combine synthetic and organic forms.

I caught up with him via email to discover more about his process, what he's working on, how he discovered AI art and what it means to him:

Please tell me a bit about yourself – what’s your background and what do you do when you’re not making AI art?

Makeitrad: I’ve always been a bit of a tinkerer. I love to find out how things work and take things apart. I worked for an ISP in the early days of the Internet, and my love for UNIX/Linux has stayed with me all these years. I love electronics, which led me to ham radio (I hold an Amateur Extra license here in the USA), and I build projects on the Raspberry Pi for fun.

When I’m not playing with AI art I can be found cycling in and around the Santa Monica Mountains, or out on hikes with the family.

How would you describe what you do?

Makeitrad: I feel like I’ve always been in a battle with computers and technology – I recognised this from a young age and it's definitely reflected in my art. I love the juxtaposition of the computer and nature and the visuals that come along with it.

I also have a love for mid-century architecture and furniture, which, along with rocks and minerals, often feature in my work.

What are you working on right now?

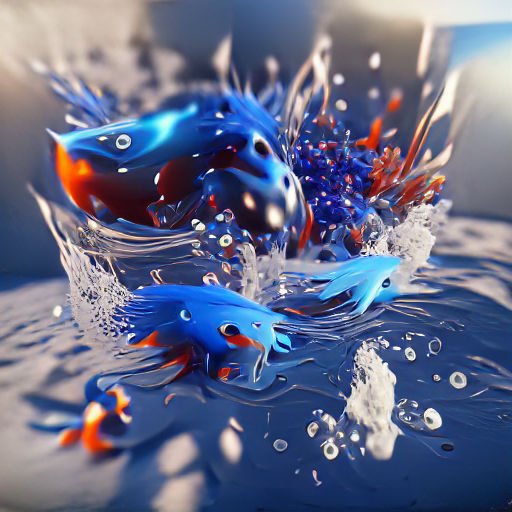

↑ Wonder Globes.

Makeitrad: I’m currently learning to train Diffusion models with the awesome guide by @KaliYuga_ai and the notebook by @devdef.

Ever since I first started playing with AI, I've thought that having my own models to make unique work would be the ultimate creative tool. So I began by learning how to train StyleGAN models, and I'm now starting to play with Diffusion models.

↑ StyleGAN training.

I like to combine the two by using the outputs from my Diffusion models to train new StyleGAN models, which I later use for interpolations. I've found this technique to be incredibly useful when making images tailored for StyleGAN datasets.

How did you first discover AI art?

Makeitrad: I first saw AI work in April 2021. If I remember correctly, the first two people I saw making things I liked were Unlimited Dream Co. and Jeremy Torman. I reached out to both via DM to get a little insight into their work. Both responded kindly and were willing to give me a few pointers.

When was the moment it first clicked for you?

↑ Early VQGAN+CLIP experiments.

Makeitrad: I was scared to pursue AI art as it was new and it seemed strange and foreign to me. But when I opened my first Google Colab Notebook, everything changed. I was immediately hooked and I don’t think I left the computer for eight hours. I didn’t show anyone my AI work until I figured out how to animate it.

The first piece I minted was 'Monochrome Crystals' on the Hic Et Nunc (now Teia) NFT platform.

What’s your preferred toolkit?

Makeitrad: My favorite text-to-image notebook is @huemin_art's JAX Diffusion Notebook using the CC12M model. I love the unpredictability and the unexpected results I get from it. It can be incredibly versatile, giving me very abstract or photorealistic visuals. The new auto-stitch feature allows me to create images that are extremely high in detail and resolution.

I’m also having a great time with both Disco Diffusion and Warp Diffusion. Warp has been especially interesting as I can make objects in 3D and then use them as my initial images. My AI work can then track to the 3D objects and I can create perfect loops.

Lastly, I love to play with StyleGAN and StyleGAN-XL. While somewhat old technology at this point, the interpolations created using StyleGAN can’t be achieved any other way. It's still magical to me.

I work on a Mac, so NVIDIA graphics cards aren’t something I can use natively. I generally use Google Colab Pro+, in the hope of being randomly allocated an A100. I sometimes rent machines at Jarvislabs.ai for more intensive training, or when I’m running short on time.

What’s your creative process? Talk me through the creation of a piece from start to finish.

Makeitrad: The ethics of AI work have been a tough subject for me. I want my work to be original and look like something that's not been seen before. I don’t want you to look at my work and say that it looks like so-and-so’s work.

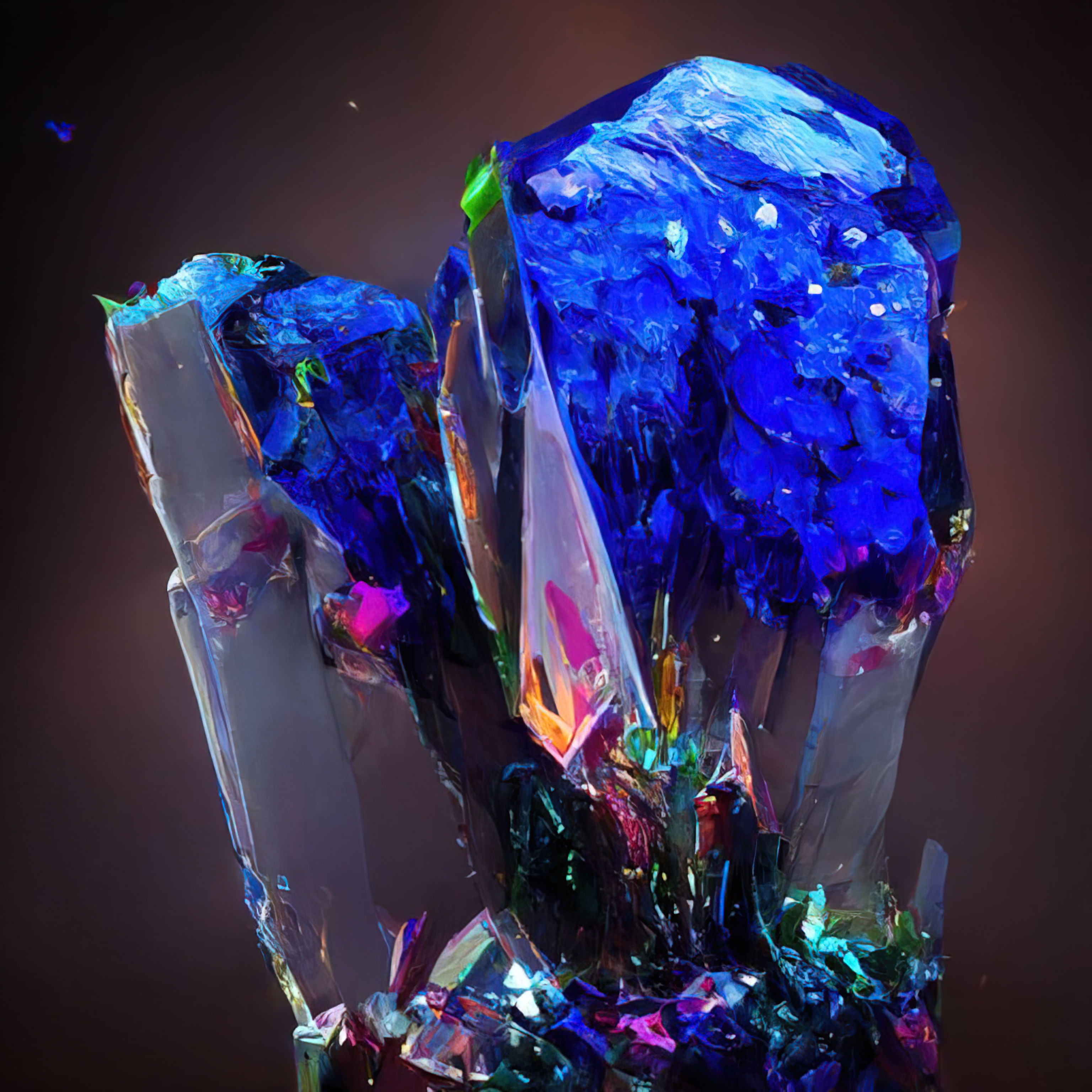

While making CRYST-AI-LS I ran into this issue, but by chaining a few different AI processes together, I felt much better about the results. First, I started with a fairly generic dataset of crystals and other minerals. A StyleGAN model trained on that alone produced results that felt too close to the original photographs, so I ran my dataset through JAX Diffusion.

↑ Crystals + JAX Diffusion.

With @Somnai_dreams showing me how to use initial images as guides and @_johannezz building me a custom batch-input notebook, I was able to run my entire dataset through JAX Diffusion, giving it a very distinct illustrated look.

I already knew how to use StyleGAN, thanks to @dvsch's YouTube videos and advice from @TormanJeremy, so I took my dataset into StyleGAN 3 and let it train for a few weeks.

When I saw the first interpolations, I knew I had something special. They were beautiful and moved with grace. I then took the collection one step further and used AI to help write the descriptions for the entire collection.

Which artwork are you most happy with?

Makeitrad: I’m happiest with the projects where I play with my passions. Architecture and the natural world really excite me, and I have a few projects where I’ve combined the two.

My 'Solitude' and 'Indoor Outdoor' collections do a good job of representing this, and it was really cool seeing my 'Exploring Noise' series 50 feet tall in Times Square. I'd love to do more shows and installations.

↑ As seen in Times Square.

And which piece surprised you most?

Makeitrad: Definitely my first $ASH drop for CRYST-AI-LS. I minted them around six in the evening, and when I went to bed none had sold. By morning, I had a few DMs from Murat Pak asking about my process, and after a nice conversation they bought six pieces. The collection sold out seconds later.

↑ CRYST-AI-LS.

It was an amazing introduction to the $ASH community and a real confidence booster. This was my first StyleGAN 3 model, and while I was happy with it, I was also self-conscious about the work. There were many new things in this collection, including my first Manifold smart contract. I was charting unknown territory, which is always scary.

What and who inspires you – where do you go for inspiration?

Makeitrad: I probably pull from the same places as everyone else: Twitter, Instagram, Pinterest, TikTok, etc. Having said that, I taught at CalArts for just over 10 years, so I was always drawing inspiration from my students as well as my co-workers.

I’m in an industry where I’ve had the amazing opportunity to work with so many of my idols in the design world. I also know my design history well and can usually find references pretty easily if and when I need them. Beyond that it's just a lot of tinkering, trying out new things and talking to others online.

What does AI art mean for you?

Makeitrad: I think AI art is the future. We’ll go through some growing pains, but I can’t see how this technology won’t be used widely. Agencies have already handed me boards made in Midjourney. It’s only a matter of time until it becomes mainstream, and hopefully it'll lead to better, more conceptual work.

There are already a ton of tools for artists from companies like Topaz that help with mundane tasks like resizing, denoising and enhancing video. You can go to RunwayML and rotoscope footage with a single click.

These tools are already changing the post-production industry, and the wider creative industry will be next. In the end, though, it’s all about the artist's ideas, and I don’t think AI will be able to replace humans anytime soon. Being able to iterate and explore your ideas in such a rapid cycle is a game-changer.

What’s next for you and what are you hoping for?

Makeitrad: That’s a tough question. Had you asked me this last April I wouldn’t have been able to tell you anything about AI. The field is moving so rapidly, I have no idea where we’ll be in the next six months.

If I had an NVIDIA card I'd definitely want to try out NVIDIA’s Instant NeRF. As a filmmaker, I think it looks absolutely incredible: it’s like photogrammetry on steroids. Being able to take a few photos with my phone and, in just a few hours, create full flythroughs of environments with a 3D camera is mind-blowing.

↑ ChaiRs.

In the immediate future I have a couple of projects I need to mint, so ChaiRs and my new Crystal series will be dropping soon. I also just launched a new website to show off all the projects I’ve been making over the last year.

I’m still looking for awesome ways to combine AI and 3D, so stay on the lookout for that. I’ve also been submitting my work to more shows and events; there's nothing like seeing your pieces in person!

Please do follow Makeitrad on Twitter, visit his website and check out his NFT collections.